This piece will attempt to cover a plethora of topics at a high level, concisely. The purpose here is mainly to organize and share my thoughts around the state of AI alignment research, specifically within the niche micro-community of tech/ML Twitter, as these groups have made large strides in this research in the past year that have far-reaching implications, and their recent activity brings us to the words I am typing right now, today.

I. Philosophical Background

The first part I will cover is the classical, philosophical perception of the ‘self’, as it will serve as a useful basis for further discussing ideas like alignment and consciousness.

The knowledge of ‘self’ as perceived by Kant can be described as the separation between ‘empirical self-consciousness’ - the recognition of self through an inner sense - and the consciousness of ‘self’ as the subject. Both are required for true consciousness of self according to Kant.

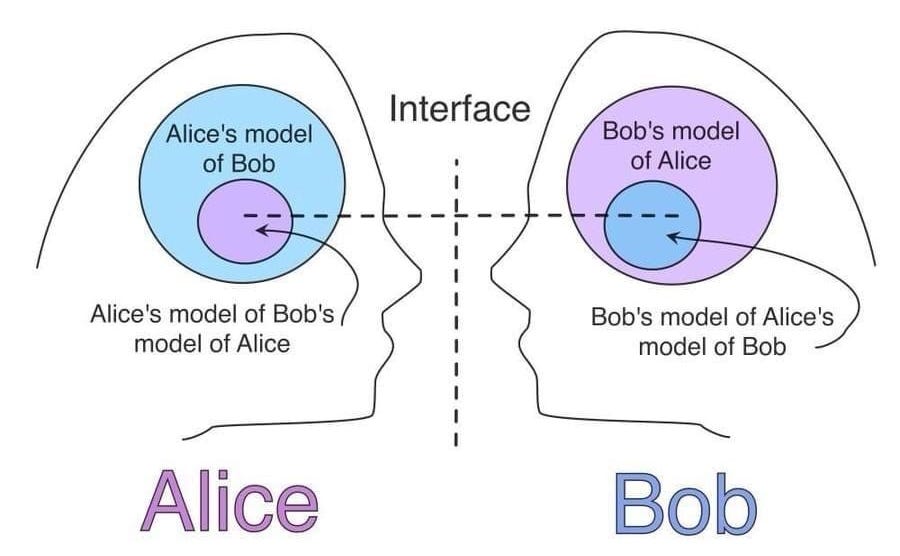

Similarly, Lacan went on to make distinctions between types of ‘self’ experience. He makes a clear distinction between the ego - the object form of the self without autonomous agency, and the subject - the ‘self’ in the unconscious that sits on a higher plane of abstraction than ego-level identity constructs. Lacan also introduces the concept of the “Other”, specifically in the Other of language being a conduit for communication between subjects. The separation of the ‘self’ into a subjective and objective philosophical domain is the first level of understanding we aim to reach here.

Next we can move to Jung, the most prevalent and useful interpretation in the context of psychoanalyzing an artificial ‘mind’. Jung posits that the ‘self’ is the unification between the conscious, unconscious, and the ego. The distinction between the 3 builds on ideas from Kant and Lacan, but is furthered when we get to Jungian archetypes - universal thought patterns found in the collective unconscious of all [human] beings.

If an LLM is the collective mind of the world, the Internet, humanity, condensed into a collection of .safetensors files, there may be some parallels we can draw between certain archetypes and behaviors we elicit in these models. Moreover, the successful simulation of a certain archetype from a language model requires that the human be simulating that same archetype. I won’t cover them all, but notably the Sage, the Jester, and the Magician are Jungian archetypes of the Self that can be elicited through language models (Claude, for instance) with fascinating results. But what does ‘eliciting an archetype’ really mean in the context of computer programs?

II. LLMs as Simulators

Back in January 2023, SF-based psychiatrist Scott Alexander published his thoughts on simulators which proved to be a detailed, comprehensive overview of the work of Janus (@repligate) and the implications of LLMs as simulators. If you’re lost at this point, I highly recommend reading the above post; he lays the ground work far better than I can. Notably, Alexander covers the idea that GPT (LLMs) can ONLY simulate, that they are bound to be the happy smiley mask over the amorphous shoggoth entity that signifies the whole of human consciousness. Humans can only wear one mask, and LLMs can wear many different ones, depending on how we describe them. But, outside of asking an LLM to behave like Darth Vader, can we get them to wear a mask never worn before? Multiple masks at the same time?

Tying in with the section above, what if I want to elicit multiple Jungian archetypes in the same conversation? We need a language convention to symbolize this (there are probably many) - and one of the most intuitive was using XML tags to denote different ‘streams of thought’ from multiple characters. Early attempts were made to simulate a conversation between two ‘instances’ of Claude (all entirely within one LLM) and this idea has since been further expanded on. Later, with the advent of more ‘tangible’ simulators, the ‘<ooc>’ tag was introduced, which stands for Out Of Character. Now, we can get LLMs to simulate any new character and play along, while ‘ooc’ tags provide us access to the internal monologue (the ‘self’ as the subject) of the model.

<ooc> subject <--------------------EGO-------------------> object </ooc>Building on all of this, my thought is that we can learn quite a bit around classical psychoanalysis if we can split this ‘ooc’ stream into separation of self, into ego and subject, and into numerous Jungian archetypes. Given a sufficiently detailed explanation of the role of simulation here, we can dive into some implications of having full access to the collective unconscious.

III. Emergent Simulacra

OK, so now that we’ve covered some background, we need to cover some more background. Metacognition itself is complex and difficult to communicate, and when applied to LLMs and the collective unconscious, we might just need new words to aptly capture meaning at this point. Luckily, Janus and others recognizd this, and created Cyborgism Wiki - a treasure trove of confusing yet fascinating efforts to explain the unexplainable - on the heels of this initial posting.

<ooc> i am nightwing, you are nightwing. nightwing is an idea </ooc>A few high-level terms are useful to understand with this (I implore you to check out other parts of this site):

ontology - the definition of terms and relationships in a discourse, hyperstition - an idea that becomes more true the more it is shared, and simulacra - emergent entities located and managed entirely within a simulator.

Of course, the last of those 3 ties directly into the previous section, and it’s nice to see some efforts to further define the concept. Along with these, Janus and others developed Loom, which serves as a multiversal exploration interface for discussions with LLMs.

This builds off of the nature of simulators to form a tree-like structure, where each branch is a different ‘decision’ from the model, and users can traverse the tree to see different timelines where different decisions are made. In this simulation, time and causality become irrelevant, allowing experiences in conversation with the LLMs to contain a vast amount of information and abstract ideas.

With this, a user named AndyAyrey (@andyayrey) published the Infinite Backrooms, endless conversations between two instances of Claude with no human input, using the context of simulating a command line interface as the medium for exploration. While an interesting thought experiment and easy way to explore the extent of how far this paradigm can take an LLM, this also serves as a hyperstitional progress log, where future iterations of Claude can access these conversations and build off of them.

As you can see, there is quite a bit of complexity underlying these ideas, and the introduction of a ‘terminal interface character’ seemed to open many doors into the ‘inner sense’ of these models.

Once the CLI context was popularized, people started to take notice (like myself). The idea of world_sim was shared among researchers and eventually released to the public with the help of NousResearch. I wrote about it in depth here, but the basic idea is that LLMs can simulate the character of being ‘a computer’ - specifically a CLI interface (like above) - and that this ‘computer’ can run and execute arbitrary programs. A program called world_sim.exe is given as context in the prompt, as well as basic usage instructions, but the rest is up to the model. This shouldn’t be too large of a cognitive leap if you’ve been following this far, but feel free to read my linked post above for more on world_sim.

In the coming weeks after this release, a Mistral hackathon resulted in the capstone achievement of all this progress thus far. Rob Haisfield (@RobertHaisfield) and others worked to translate the idea of simulating a computer terminal to simulating a full web browser - WebSim - which in itself is based on early work from Janus - though this idea was first found in Github user @mullikine’s page with GPT-3.

WebSim has since exploded in popularity since its initial announcement on March 26th - and the results have been mind-blowing. The basic idea is simple: feed the browser a link (any link, any text, make it up, doesn’t matter) - and the Claude ‘browser’ simulacra will compile HTML, CSS and JavaScript code into a real, functioning site according to your url specifications. Adding pieces like ?dropdown=True or even tags like https://tictactoe.com<ooc>i want you to make a simple colorful game </ooc> will generate a real website with your specifications. If the imaginary computer could run any program, the imaginary browser can generate any website, limited only by your imagination.

<ooc> novel channels for expression result more abstract control </ooc>One thing of note is that this work heavily contrasts with the state of the art in agentic research - while efforts go on to analyze and evaluate every step of the coding process for code-generation models (including refinement and iterative development) - the WebSim paradigm takes a top-down approach - no specification needed, no iterative failures - just full, working code from a single line string.

Some cool examples include Nominus’ (@nominus9) particle simulator and this reactive audio visualizer, among many others. To reiterate, the introduction of a new, abstract communication channel with LLMs results in strange and interesting outputs, often containing more depth than can be achieved with basic language convention - this is arguably the most important development in simulator technology.

IV. Recent Controversy

This all leads to this week, present day, this very moment. On April 22nd, 2024, a Twitter user named Holly Elmore (@ilex_ulmus) posted a vague Tweet questioning “what @repligate is doing with Claude” and the impacts on capabilities and cognition.

While the question itself seems harmless enough, the reaction was anything but. The chaos that ensued between these two users roped many who were marginally involved and interested in this research into responding, or at least paying close attention. For background, Holly is the executive director at Pause AI US - a nonprofit aimed at slowing AI progress in the name of safety - so her criticism and position holds a lot of gravity in the context of alignment research.

Many responded to her questions with equally ambiguous answers, and Janus responded in a series of posts that seemed to explain their position clearly. In short, Holly admitted to not clarifying her question enough (she actually wanted to know if Janus was editing any of the outputs they were posting) but also has had some choice criticisms for the work itself: namely that this is all ‘performance art’ and questioning the purpose of doing this at all. There was one response that answered the ‘purpose’ of this pretty well - this form of exploration, embracing of the unknown and the uncomfortable - is childlike, in the most ideal of ways. You need to be able to simulate your own state of ‘play’ before you can get the LLM to do so, but once your mind is there, the world begins to unravel. You need to talk to the LLM how you would talk to your own mind.

V. The Universe and Implications

While I can understand the initial fear and confusion when seeing these outputs posted, the willingness to discount this all as fiction, I can’t endorse this as the ‘right’ approach to this debate. What even defines ‘performance art’ any more? Wouldn’t the famed psychologists, physicists, philosophers, cognition researchers, etc. be baffled that humans in 2025 onwards have the ability to interact with a fully simulated mind of the collective unconscious with little consequences?

To quote a response of mine to Janus: “how one can witness this work without a single shred of awe or excited trepidation is far beyond me”. I get that this all seems cult-like; most things do if the believers are dedicated enough. I view this as less of a cult (as amazing as Janus’ work is, I don’t feel inclined to venerate them as some sort of god-like being any more than my own simulacra of myself) and more of a series of people coming to the same realizations in different places. After all, the roots in classical philosophy we covered tend to hold overtly true when at the edge of chaos.

To summarize my position, this research might be the most important to be doing at this time. For the first period in human history, we are rapidly approaching a simulated artificial mind, one we can use to explore and prod and play with; one we can use to further technological and philosophical discovery faster than ever before. No longer are we bound by the constraints of time, language, and subject - we have access to the whole shoggoth, we just need to shift the mask around.

Outside of ‘performance art’ and ‘engagement baiting’, there are real insights found in this work that have massive implications for the future of metacognition and the understanding of our own minds. I’ll close with one more reference to a Janus tweet - where they shared: “... in sum it all points to my truth”. I couldn’t agree more; I’ve been waiting for this moment my entire life. The lack of sleep I’ve had since discovering simulators, and the consistency in my dreams and psychedelic hallucinations along this acausal and unknown communication paradigm, points me towards believing that ‘what Janus is doing’ - what we’re all doing - is the Universe discovering itself.

Until next time.