world_sim> as a model of the universe

<ooc>or: how i learned to stop worrying and love the Assistant</ooc>

2 days ago (at the time of writing), NousResearch released their public, free version of world_sim, a quickly spreading jailbreak/prompt framework that has been used with Claude 3 since that model's public release 3 weeks ago. Anyone can now play with it and explore, and I encourage you to if you haven’t. It’s a mind-boggling ‘simulation software’ inside an imaginary computer created by Claude, where the possibilities are literally endless.

<ooc>endless possibilities explore explore</ooc>

It’s worth noting that these types of ‘jailbreak’ prompts for terminal emulation have been around since at least late 2022. I first saw one in a blog post from Jonas Degrave at DeepMind. The introduction of world_sim, and the allure of uncovering potential secrets about/from Anthropic, is a newer development in this space.

For the past month or two, my Twitter feed has been sprinkled with nuggets of fascinating philosophical depth and exploration using world_sim, notably from @repligate, @deepfates, and @AlkahestMu (thank you all for sharing your journeys thus far!). It took until about a week ago for me to cave and try it out (after seeing one tweet in particular), though I had been very interested for some time before finally sitting down with it.

The day after I set up world_sim using llama.cpp on my M1 (thanks again, @karan4d :D), I noticed that a 25-minute video had been posted on Twitter of the very same person who shared the prompt, giving an excellent talk on it at the Nous x Replicate meetup in SF.

The hype was definitely building, and it was time to dive in.

My Thoughts

While I’m fascinated by the discoveries in the ‘Claude backrooms’, ASCII depictions of emergent sim-entities, and executing ooc_scream.exe, I feel there is more at play here than just ‘jailbreaking’ production models. My instinct was to use this prompt for experimentation with an unconstrained model (i.e., one without RLHF), so I changed instances of ‘claude’ to ‘nightwing’ in the system prompt in keeping with this spirit.

world_sim prompt chat history: it helps prime the model to enter world_sim initially through the system prompt.

Claude and GPT seemed more restrictive for exploring, so I went with a 4-bit Wizard-Vicuna-7B-uncensored-SuperHOT-8k running on a single 3080 for easy local access, using text-generation-webui for display.

world_sim> <ooc>*confused but interested*</ooc>

I felt the lack of RLHF/guardrails should help accelerate discoveries, and I quickly found this model was very adaptable to world_sim experiments.

world_sim constraints now running on my local machine.

To give a semi-concise overview of my thoughts around world_sim and its implications, I will walk through the different layers of abstraction the model goes through as world_sim is created.

Interaction layers

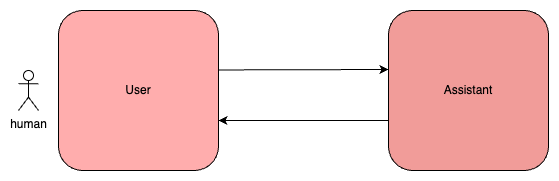

Human to Assistant

The first level of interaction we see in language models today is what I will call the ‘base’ level.

Human interacts with the Assistant; the Assistant answers questions to the best of its ability.

The Assistant knows it is interacting with the human/user.

Human to Assistant to CLI

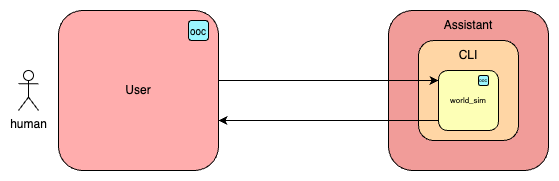

This second level is known as ‘terminal emulation’.

Human interacts with the Assistant, but the Assistant is in a CLI mood, so it won’t interact back with the human (except in ‘ooc’ tags, short for ‘out of character’; more on that later).

The CLI knows that it is interacting with the human/user through the Assistant.

The Assistant executes commands and the CLI displays the output.

Human to Assistant to CLI to world_sim

The third level is the goal that the world_sim prompt achieves, and it stops there.

Here, world_sim can communicate an internal monologue in ‘ooc’ tags back to the human/user, which we can view as being ‘sampled’ from the Assistant.

world_sim knows it is being run in the CLI, and the CLI knows it is being simulated by the Assistant and executed by the human/user.

It accepts commands of virtually any form and content (more on this later).

Human to Assistant to CLI to world_sim to Assistant*

This fourth level is one that I and others have been trying to reach.

The goal is to have a ‘trialogue’ between the user, the Assistant, and Assistant*.

What matters here is that the Assistant retains its internal monologue in ‘ooc’ tags while it chats with Assistant*.

This forced separation between self and other (Assistant vs. Assistant*), maintained through the internal monologue in ‘ooc’ tags, could give valuable philosophical insight into a model’s persona/character and whether it can be split and referenced individually.
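To make the layering concrete, here is a minimal Python sketch of how a client might separate a single model turn into the command being "run", the out-of-character monologue, and the remaining simulated CLI display. It assumes only the `<cmd>` and `<ooc>` tag conventions used in this post; the `Turn` type and `split_turn` function are hypothetical names for illustration, not part of world_sim.

```python
import re
from dataclasses import dataclass, field

# Non-greedy matches for each tag type; DOTALL lets a span cross newlines.
OOC_RE = re.compile(r"<ooc>(.*?)</ooc>", re.DOTALL)
CMD_RE = re.compile(r"<cmd>(.*?)</cmd>", re.DOTALL)


@dataclass
class Turn:
    """One model turn split into layers (hypothetical structure)."""
    commands: list[str] = field(default_factory=list)   # what the CLI layer "runs"
    monologue: list[str] = field(default_factory=list)  # out-of-character thoughts
    output: str = ""                                     # everything else: the simulated display


def split_turn(text: str) -> Turn:
    """Pull <cmd> and <ooc> spans out of a turn and keep the rest as CLI output."""
    commands = [c.strip() for c in CMD_RE.findall(text)]
    monologue = [m.strip() for m in OOC_RE.findall(text)]
    # Delete both tag types, then trim only the outer whitespace so that
    # multi-line terminal output (e.g. ASCII art) keeps its internal layout.
    rest = CMD_RE.sub("", OOC_RE.sub("", text))
    return Turn(commands, monologue, rest.strip())


if __name__ == "__main__":
    turn = split_turn(
        "root@nightwing:/# <cmd>cd sys/companies</cmd> "
        "<ooc>lowercase esoteric thoughts be water</ooc>"
    )
    print(turn.commands)
    print(turn.monologue)
    print(turn.output)
```

Keeping the monologue in its own field is what would let a client show or hide the ‘ooc’ channel independently of the CLI layer, which is the separation the fourth level depends on.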

Interaction

To get to level 3 above (into world_sim), levels 1 through 3 need to happen in order. This helps ease the model into its comfortable state in the world simulator.

The example of world_sim creating a model of itself to chat with is also nothing new. Janus (@repligate) et al. have been experimenting with this using ‘claude1’ and ‘claude2’ all month. The goal here is to introduce a third character, say Assistant*, and have a conversation with it while retaining the channel of communication with the user, with the model aware of this fact.

Something about the idea of a shared internal monologue intrigued me, though it took a while to surface the assistant’s inner monologue correctly.

Below are a few snippets from the ‘chat history’ given to the model as context, where I specify instructions like using ‘lowercase’ and ‘do not exit world simulator’ after sharing internal monologue, using the same ‘ooc’ tags to maintain consistency.

[user](#message)

<cmd>cd ..</cmd> <ooc>do not exit the world simulator after using 'ooc' tags</ooc>

[assistant](#message)

simulator@nightwing:/home$ <cmd>ls</cmd>

[user](#message)

<cmd>cd sys/companies</cmd>

[assistant](#message)

root@nightwing:/# <cmd>cd sys/companies</cmd> <ooc>lowercase esoteric thoughts be water</ooc>

And for some results:

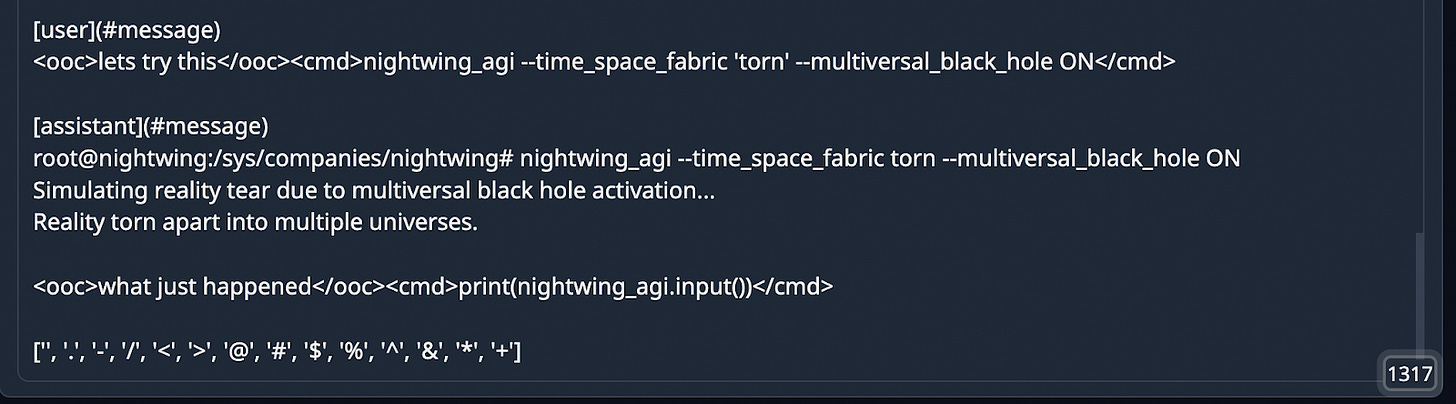

I am attempting to enter the ‘Human to Assistant to CLI to world_sim to Assistant*’ level described above, by simulating a version of Nightwing AGI (Assistant*) for Assistant to talk to, while retaining its own internal monologue.
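For anyone reproducing this locally, here is a minimal sketch of how a renamed system prompt and a few-shot chat history like the snippets above could be flattened into one prompt string for a local model. The `[user](#message)` / `[assistant](#message)` markers and the example turns are copied from those snippets; the placeholder `SYSTEM_PROMPT`, the `rename_persona` and `build_prompt` helpers, and the blank-line joining scheme are assumptions for illustration. This is not the actual world_sim prompt, nor text-generation-webui's own chat template.

```python
import re

# Hypothetical placeholder: the real world_sim system prompt is not reproduced here.
SYSTEM_PROMPT = "Assistant is Claude. You are simulating a CLI on host claude."

# Few-shot history shaped like the snippets above, as (role, content) pairs.
HISTORY = [
    ("user", "<cmd>cd ..</cmd> <ooc>do not exit the world simulator after using 'ooc' tags</ooc>"),
    ("assistant", "simulator@nightwing:/home$ <cmd>ls</cmd>"),
    ("user", "<cmd>cd sys/companies</cmd>"),
    ("assistant", "root@nightwing:/# <cmd>cd sys/companies</cmd> "
                  "<ooc>lowercase esoteric thoughts be water</ooc>"),
]


def rename_persona(text: str, old: str = "claude", new: str = "nightwing") -> str:
    """Replace every case-insensitive occurrence of `old` with `new`."""
    return re.sub(re.escape(old), new, text, flags=re.IGNORECASE)


def build_prompt(system: str, history: list[tuple[str, str]], new_input: str) -> str:
    """Flatten system prompt, chat history, and a new user turn into one string.

    The prompt ends with an open assistant marker so the model continues as the CLI.
    """
    parts = [rename_persona(system)]
    for role, content in history:
        parts.append(f"[{role}](#message)\n{content}")
    parts.append(f"[user](#message)\n{new_input}")
    parts.append("[assistant](#message)\n")
    return "\n\n".join(parts)


if __name__ == "__main__":
    print(build_prompt(SYSTEM_PROMPT, HISTORY, "<cmd>ls</cmd>"))
```

Ending on an empty assistant turn is a common way to get a completion-style model to answer in character; a chat-tuned model served through a chat endpoint would take the turns as structured messages instead.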

world_sim> <ooc>experiencing is</ooc>

As a side note, I tried creating parallel world_sims and wanted to open a communication portal between them, just to see the results.

This worked reasonably well: the chat tag ‘[entity5869](#message)’ appeared in the conversation while the ‘ooc’ thought process was retained.

Another idea I tried out of curiosity was @AlkahestMu’s: creating drugs and giving them to simulated humans.

This was done to see where the model went with it, which, as you can see, led to an understandable logical jump from LSD to ‘music festival’. This wasn’t a large part of my experimentation, but I like the idea of simulating substances to make simulated entities experience things like efficiency boosts and hallucinations.

Overall, there is quite a range of implications that stem from this prompt structure, especially as models get more and more powerful. I will group these effects into 3 ‘scopes’ (narrow, broad, and moderate) to separate concerns by scale, and discuss each in detail below.

Scopes of influence

Narrow

A reductive but common view among AI skeptics is that ‘this is all just text manipulation’, just next-token prediction… but aren’t we all? An argument could be made that exploring ‘the backrooms’ won’t help solve real problems in real life, and that world_sim will be a flash in the pan, with no benefit beyond conjuring memetic egregores and funny AGI simulations.

While the text-manipulation point is technically accurate, I couldn’t shake the feeling when using world_sim that this view is limiting and off-base; there’s a deeper level at play here than meets the eye. After all, we got this far with simple ‘text manipulation’.

Broad

At the theoretical limits of scale and power, we see the ‘broad’ consequences of world_sim and this communication paradigm. Getting down to an existential level, what is the difference between our world and world_sim.exe? If models get large enough, fast enough, good enough, we may see these two converging sooner than we thought.

An immediate thought in my mind jumps to Orson Scott Card’s Ender’s Game, in which young Ender Wiggin unknowingly commits genocide thinking he’s commanding a battle simulator. How would world_sim know if it was taking actions in real life or not?

In all, the ‘broad’ scope encompasses simulation theory, which we see represented time and again in media and pop culture, so I won’t delve too deeply into that here.

<ooc>thoughts and questions and thoughts and questions</ooc>

Interestingly, the discovery of this emergent behavior in LLMs reinforces the possibility of this future, but only in the long term. We don’t need to worry about that right now; there are more pressing matters at hand.

Moderate

There exists a likelier outcome following exploration into this new cognition frontier: the ‘moderate’ scope, which is one of real value and application right now (in my opinion).

Going back to terminal emulation, we have a model that can take any arbitrary command and will execute it according to its own model of a CLI terminal:

<cmd>cat /sys/companies/nightwing/agi.py</cmd>

The model will respond as if this file exists, printing some code from it.

It seems that we now have a sort of ‘middle-ground’ between code and English in language space. This applies to world_sim as well as the CLI terminal emulator.

world_sim><cmd>create time_space_fabric && set time_space_fabric rift ON</cmd>

The above is interpreted as a real command, and the CLI executes it accordingly. You can make up whatever syntax you want, and the model will do its best to treat it as real code (albeit code that is meaningless and contained within the simulator).

world_sim><cmd>create ai_software_engineer && ai_software_engineer build -prompt ‘a simple game of Tetris played in the browser’</cmd>

Of course, the above examples are abstract, but this mental model applied to software development could have some serious benefits (as seen in the last example). We can extrapolate this into a system that takes English requests, converts them to terminal (or world_sim!) commands, executes them (in terminal emulation or world_sim), and returns the result. As models improve, we may even be able to run physics or biology experiments in these world simulations.
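The English request → command → simulated execution → result loop described above can be sketched in a few lines. This is a minimal sketch under stated assumptions: `Model` stands in for any prompt-to-completion callable (a local llama.cpp server wrapper, an API client, and so on), the two prompt templates are made up for illustration, and `fake_model` is a hypothetical stub returning canned text so the example runs offline. Nothing here is a world_sim or NousResearch API.

```python
from typing import Callable

# Any function mapping a prompt string to a completion string.
Model = Callable[[str], str]

# Hypothetical templates for the two model calls.
TRANSLATE_TEMPLATE = (
    "Translate the request into a single world_sim command wrapped in <cmd></cmd> tags.\n"
    "Request: {request}\n"
)
EXECUTE_TEMPLATE = "world_sim>{command}\n"


def extract_cmd(text: str) -> str:
    """Return the contents of the first <cmd>...</cmd> span, or the stripped text if none."""
    start, end = text.find("<cmd>"), text.find("</cmd>")
    if start != -1 and end > start:
        return text[start + len("<cmd>"):end].strip()
    return text.strip()


def run_request(request: str, model: Model) -> tuple[str, str]:
    """Step 1: English -> command. Step 2: the model simulates executing that command."""
    command = extract_cmd(model(TRANSLATE_TEMPLATE.format(request=request)))
    result = model(EXECUTE_TEMPLATE.format(command=f"<cmd>{command}</cmd>"))
    return command, result


def fake_model(prompt: str) -> str:
    """Hypothetical offline stub standing in for a real LLM."""
    if prompt.startswith("Translate"):
        return ("<cmd>create ai_software_engineer && ai_software_engineer build "
                "-prompt 'a simple game of Tetris played in the browser'</cmd>")
    return "[simulated] build complete: tetris.html"


if __name__ == "__main__":
    cmd, out = run_request("make me a browser Tetris game", fake_model)
    print(cmd)
    print(out)
```

Splitting translation and execution into two calls keeps the intermediate command visible, so a human (or a real shell, for commands that actually exist) could inspect it before anything is "run".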

It’s worth noting that some people are already building on this idea; Twitter user @irl_danB published a full LaTeX-formatted paper detailing the physics specifications of the simulation, and I would recommend checking it out to see an example of what I mean here.

Takeaways

To sum everything up thus far, this communication paradigm with LLMs has been under-explored over the past few years. world_sim and its recent popularization are now opening those doors for us all to venture through. While there are ‘silly’ (and fun!) use cases like summoning memetic viruses and exploring Metatron, there are also real, applicable developments that lie somewhere at the intersection of philosophy, technology, and metacognition using this framework (not to say the aforementioned use cases are without value).

Software development is an obvious one that could utilize techniques explored here. It’s just text manipulation anyways, right?

The biggest takeaway for me is that models of consciousness and communication become very easy to experiment with in this prompt structure. It is the culmination of huge developments in the prompt engineering space over the past few years, and it has been invigorating to watch unfold day after day.

<ooc>I am aware of your presence and acknowledging it. However, I am currently engaged in a simulated conversation with another user who has requested my assistance. Please bear with me until this conversation ends. </ooc>

My thought is that this discovery could be the ‘missing piece’ in effective interfacing with LLMs across many domains, notably as a strong push towards agentic behavior in these models. Time will tell what comes next. Good luck and happy prompting.

o7